- Home

- Amit Khandelwal 1

- Subjective Answers

thumb_up 11 thumb_down 1 flag 0

Dynamic programming (usually referred to asDP ) is a very powerful technique to solve a particular class of problems. It demands very elegant formulation of the approach and simple thinking and the coding part is very easy. The idea is very simple, If you have solved a problem with the given input, then save the result for future reference, so as to avoid solving the same problem again.. shortly'Remember your Past' :) . If the given problem can be broken up in to smaller sub-problems and these smaller subproblems are in turn divided in to still-smaller ones, and in this process, if you observe some over-lapping subproblems, then its a big hint for DP. Also, the optimal solutions to the subproblems contribute to the optimal solution of the given problem

thumb_up 2 thumb_down 0 flag 0

thumb_up 52 thumb_down 1 flag 0

Consider 2 buckets one 4L and other 9L. : Bucket 1 (4L) and Bucket2 (9L)

First fill the 9L bucket fully. : 0 L and 9 L

Pour the water into 4L bucket. : 4 L and 5 L

Empty the 4L bucket. : 0 L and 5 L

Repeat this twice. : 4 L and 1 L

Now you will left with 1L water in the 9L bucket : 0 L and 1 L

Now pour this 1L into the 4L bucket : 1 L and 0 L

Refill the 9L bucket. : 1 L and 9 L

Now pour the water from 9L into the 4L bucket until it fills up. : 4 L and 6 L

Now you are left with 6 L water in the 9L bucket.

thumb_up 13 thumb_down 0 flag 0

Self-driving Car

Lung cancer detection system

Face recognition

thumb_up 2 thumb_down 0 flag 0

WCF has a couple of built-in bindings that are designed to fulfill some specific needs. You can also define your own custom bindings in WCF to fulfill your needs. All built-in bindings are defined in the System.ServiceModel Namespace. Here is the list of 10 built-in bindings in WCF that we commonly use.

Basic binding

This binding is provided by the BasicHttpBinding class. It is designed to expose a WCF service as an ASMX web service, so that old clients (that are still using an ASMX web service) can consume the new service. By default, it uses the HTTP protocol for transport and encodes the message in UTF-8 text format. You can also use HTTPS with this binding.

Web binding

This binding is provided by the WebHttpBinding class. It is designed to expose WCF services as HTTP requests using HTTP-GET and HTTP-POST. It is used with REST based services that may provide output in XML or JSON format. This is very much used with social networks for implementing a syndication feed.

Web Service (WS) binding

This binding is provided by the WSHttpBinding class. It is like a basic binding and uses HTTP or HTTPS protocols for transport. But this is designed to offer various WS - * specifications such as WS – Reliable Messaging, WS - Transactions, WS - Security and so on which are not supported by Basic binding.

wsHttpBinding= basicHttpBinding + WS-* specification

WS Dual binding

This binding is provided by the WsDualHttpBinding class. It is like a WsHttpBinding except it supports bi-directional communication which means both clients and services can send and receive messages.

TCP binding

This binding is provided by the NetTcpBinding class. It uses TCP protocol for communication between two machines within intranet (means same network). It encodes the message in binary format. This is a faster and more reliable binding compared to the HTTP protocol bindings. It is only used when the communication is WCF-to-WCF which means both client and service should have WCF.

IPC binding

This binding is provided by the NetNamedPipeBinding class. It uses named pipe for communication between two services on the same machine. This is the most secure and fastest binding among all the bindings.

MSMQ binding

This binding is provided by the NetMsmqBinding class. It uses MSMQ for transport and offers support to a disconnected message queued. It provides solutions for disconnected scenarios in which the service processes the message at a different time than the client sending the messages.

Federated WS binding

This binding is provided by the WSFederationHttpBinding class. It is a specialized form of WS binding and provides support to federated security.

Peer Network binding

This binding is provided by the NetPeerTcpBinding class. It uses the TCP protocol, but uses peer networking as transport. In this networking each machine (node) acts as a client and a server to the other nodes. This is used in the file sharing systems like a Torrent.

MSMQ Integration binding

This binding is provided by the MsmqIntegrationBinding class. It offers support to communicate with existing systems that communicate via MSMQ.

thumb_up 1 thumb_down 1 flag 0

https://www.geeksforgeeks.org/longest-palindromic-substring-set-2/

thumb_up 0 thumb_down 1 flag 0

https://www.geeksforgeeks.org/write-a-c-function-to-print-the-middle-of-the-linked-list/

thumb_up 0 thumb_down 0 flag 0

In WCF, all services expose contracts. Thecontract is a platform-neutral and standard way of describing what the service does. WCF defines four types of contracts.

Service contracts

Describe which operations the client can perform on the service.

Data contracts

Define which data types are passed to and from the service. WCF defines implicit contracts for built-in types such asint andstring, but you can easily define explicit opt-in data contracts for custom types.

Fault contracts

Define which errors are raised by the service, and how the service handles and propagates errors to its clients.

Message contracts

Allow the service to interact directly with messages. Message contracts can be typed or untyped, and are useful in interoperability cases and when there is an existing message format you have to comply with.

thumb_up 29 thumb_down 0 flag 0

Generally there are 2 types of processors, 32-bit and 64-bit.

This actually tells us how much memory a processor can have access from a CPU register.

A 32-bit system can reach around 2^32 memory addresses, i.e 4 GB of RAM or physical memory.

A 64-bit system can reach around 2^64 memory addresses, i.e actually 18-Billion GB of RAM. In short, any amount of memory greater than 4 GB can be easily handled by it.

- To install 64 bit version, you need a processor that supports 64 bit version of OS.

- If you have a large amount of RAM in your machine (like 4 GB or more), only then you can really see the difference between working of 32-bit and 64-bit versions of Windows.

- 64-bit can take care of large amounts of RAM or physical memory more effectively than 32-bit.

- Using 64-bit can help you a lot in multi-tasking. you can easily switch between various applications without any problems or your windows hanging.

- If you're a gamer who only plays High graphical games like Modern Warfare, GTA V, or use high-end softwares like Photoshop or CAD which takes a lot of memory, then you should go for 64-bit Operating Systems. Since it makes multi-tasking with big softwares easy and efficient for users.

You cannot change your OS from 32 bit to 64 bit because they're built on different architecture. You need to re-install a 64 bit version of the OS. 32 bit is also known as x86 or x32. To download 64 bit version look for x64.

thumb_up 15 thumb_down 1 flag 0

This means the CPU can execute 3.3e+9 instructions per second on each core.

CPU clock speed (measured in Hz, KHz, MHz, and GHz) is a handy way to tell the speed of a processor.

thumb_up 2 thumb_down 0 flag 0

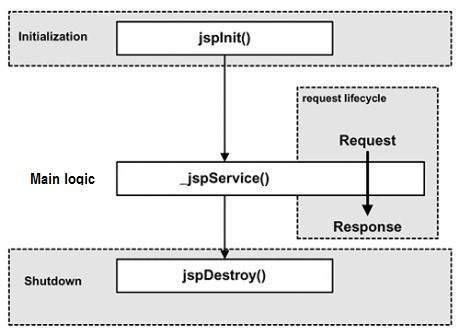

A servlet is simply a class which responds to a particular type of network request - most commonly an HTTP request. Basically servlets are usually used to implement web applications - but there are also various frameworks which operate on top of servlets (e.g. Struts) to give a higher-level abstraction than the "here's an HTTP request, write to this HTTP response" level which servlets provide.

A Servlet is aJava application programming interface (API) running on the server machine which can intercept requests made by the client and can generate/send a response accordingly. A well-known example is the HttpServletwhich provides methods to hook on HTTP requests using the popular HTTP methods such as GETand POST.

Servlets run in aservlet container which handles the networking side (e.g. parsing an HTTP request, connection handling etc). One of the best-known open source servlet containers is Tomcat.

thumb_up 4 thumb_down 0 flag 0

Library:

It is just acollection ofroutines (functional programming) orclass definitions(object oriented programming). The reason behind is simplycode reuse, i.e. get the code that has already been written by other developers. The classes or routines normally definespecific operations in a domain specific area. For example, there are some libraries of mathematics which can let developer just call the function without redo the implementation of how an algorithm works.

Framework:

In framework, all thecontrol flow is already there, andthere are a bunch of predefined white spotsthat we shouldfill out with our code. A framework is normally more complex. Itdefines a skeleton where the application defines its own features to fill out the skeleton. In this way, your code will be called by the framework when appropriately. The benefit is that developers do not need to worry about if a design is good or not, but just about implementing domain specific functions.

thumb_up 5 thumb_down 0 flag 1

ASCII defines 128 characters, which map to the numbers 0–127. Unicode defines (less than) 221characters, which, similarly, map to numbers 0–221 (though not all numbers are currently assigned, and some are reserved).

Unicode is a superset of ASCII, and the numbers 0–128 have the same meaning in ASCII as they have in Unicode. For example, the number 65 means "Latin capital 'A'".

Because Unicode characters don't generally fit into one 8-bit byte, there are numerous ways of storing Unicode characters in byte sequences, such as UTF-32 and UTF-8.

C follows ASCII and Java follows UNICODE.

thumb_up 1 thumb_down 0 flag 0

While logged in as root, we can use ps aux | less command. As the list of processes can be quite long and occupy more than a single screen, the output ofps aux can be piped (transferred) to the less command, which lets it be viewed one screen full at a time. The output can be advanced one screen forward by pressing theSPACE barand one screen backward by pressing theb key.

thumb_up 4 thumb_down 0 flag 0

Permission Groups

Each file and directory has three user based permission groups:

- owner - The Owner permissions apply only the owner of the file or directory, they will not impact the actions of other users.

- group - The Group permissions apply only to the group that has been assigned to the file or directory, they will not effect the actions of other users.

- all users - The All Users permissions apply to all other users on the system, this is the permission group that you want to watch the most.

Permission Types

Each file or directory has three basic permission types:

- read - The Read permission refers to a user's capability to read the contents of the file.

- write - The Write permissions refer to a user's capability to write or modify a file or directory.

- execute - The Execute permission affects a user's capability to execute a file or view the contents of a directory.

We can use the 'chmod' command which stands for 'change mode'. Using the command, we can set permissions (read, write, execute) on a file/directory for the owner, group and the world.Syntax:

chmod permissions filename

The table below gives numbers for all for permissions type:

NumberPermission Type Symbol

0No Permission ---

1Execute --X

2Write -w-

3 Execute + Write -wx

4Read r--

5Read + Execute r-x

6Read +Write rw-

7 Read + Write +Execute rwx

thumb_up 1 thumb_down 0 flag 0

apt-get is the command-line tool for working with APT software packages.

APT (the Advanced Packaging Tool) is an evolution of the Debian .deb software packaging system. It is a rapid, practical, and efficient way to install packages on your system. Dependencies are managed automatically, configuration files are maintained, and upgrades and downgrades are handled carefully to ensure system stability.

thumb_up 1 thumb_down 0 flag 0

http://www.geeksforgeeks.org/puzzle-1-how-to-measure-45-minutes-using-two-identical-wires/

thumb_up 17 thumb_down 0 flag 0

7

The minimum no of races to be held is 7.

Make group of 5 horses and run 5 races. Suppose five groups are a,b,c,d,e and next alphabet is its individual rank in tis group(of 5 horses).for eg. d3 means horse in group d and has rank 3rd in his group. [ 5 RACES DONE ]

a1 b1 c1 d1 e1

a2 b2 c2 d2 e2

a3 b3 c3 d3 e3

a4 b4 c4 d4 e4

a5 b5 c5 d5 e5

Now make a race of (a1,b1,c1,d1,e1).[RACE 6 DONE] suppose result is a1>b1>c1>d1>e1

which implies a1 must be FIRST.

b1 and c1 MAY BE(but not must be) 2nd and 3rd.

FOR II position,horse will be either b1 or a2

(we have to fine top 3 horse therefore we choose horses b1,b2,a2,a3,c1 do racing among them [RACE 7 DONE].the only possibilities are :

c1 may be third

b1 may be second or third

b2 may be third

a2 may be second or third

a3 may be third

The final result will give ANSWER. suppoose result is a2>a3>b1>c1>b2

then answer is a1,a2,a3,b1,c1.

HENCE ANSWER is 7 RACES

thumb_up 1 thumb_down 3 flag 0

The major difference between these two, is that the result of BFS is always a tree, whereas DFS can be a forest (collection of trees). Meaning, that if BFS is run from a node s, then it will construct the tree only of those nodes reachable from s, but if there are other nodes in the graph, will not touch them. DFS however will continue its search through the entire graph, and construct the forest of all of these connected components. This is, as they explain, the desired result of each algorithm in most use-cases.

thumb_up 0 thumb_down 0 flag 0

thumb_up 1 thumb_down 2 flag 0

thumb_up 0 thumb_down 2 flag 0

thumb_up 1 thumb_down 0 flag 0

thumb_up 0 thumb_down 2 flag 0

thumb_up 0 thumb_down 0 flag 0

thumb_up 8 thumb_down 0 flag 0

If you want to turn an existing object into well formatted JSON, you can youJSON.stringify(obj),using the third JSON.stringify argument which represents the space indentation levels:

var formatted = JSON.stringify(myObject, null, 2); Example: /* Result: { "myProp": "GeeksforGeeks", "subObj": { "prop": "JSON DATA" } } */ The resulting JSON representation will be formatted and indented with two spaces!

thumb_up 7 thumb_down 0 flag 0

An n-ary tree is a rooted tree in which each node has no more than n children. It is also sometimes known as a n-way tree, an K-ary tree, or an m-ary tree. A binary tree is the special case where n=2.

thumb_up 2 thumb_down 0 flag 0

thumb_up 7 thumb_down 0 flag 1

A binary tree is a tree where each node can have zero, one or, at most, two child nodes. Each node is identified by a key or id.

A binary search tree is a binary tree which nodes are sorted following the follow rules: all the nodes on the left sub tree of a node have a key with less value than the node, while all the nodes on the right sub tree have higher value and both the left and right subtrees must also be binary search trees.

So, a binary tree can be a binary search tree, if it follows all the properties of a binary search tree.

http://www.geeksforgeeks.org/a-program-to-check-if-a-binary-tree-is-bst-or-not/

thumb_up 0 thumb_down 0 flag 0

An electricity meter, electric meter, electrical meter, or energy meter is a device that measures the amount of electric energy consumed by a residence, a business, or an electrically powered device.

thumb_up 1 thumb_down 0 flag 0

A machine-readable code in the form of numbers and a pattern of parallel lines of varying widths, printed on a commodity and used especially for stock control.

thumb_up 0 thumb_down 1 flag 0

thumb_up 1 thumb_down 0 flag 0

A web container (also known as a servlet container; and compare "webtainer") is the component of a web server that interacts with Java servlets. A web container is responsible for managing the lifecycle of servlets, mapping a URL to a particular servlet and ensuring that the URL requester has the correct access-rights.

thumb_up 1 thumb_down 0 flag 0

The heap memory is the runtime data area from which the Java VM allocates memory for all class instances and arrays. The heap may be of a fixed or variable size. The garbage collector is an automatic memory management system that reclaims heap memory for objects.

-

Eden Space: The pool from which memory is initially allocated for most objects.

-

Survivor Space: The pool containing objects that have survived the garbage collection of the Eden space.

thumb_up 2 thumb_down 0 flag 0

Traceroute works by sending packets with gradually increasing TTL value, starting with TTL value of one. The first router receives the packet, decrements the TTL value and drops the packet because it then has TTL value zero. The router sends an ICMP Time Exceeded message back to the source. The next set of packets are given a TTL value of two, so the first router forwards the packets, but the second router drops them and replies with ICMP Time Exceeded. Proceeding in this way, traceroute uses the returned ICMP Time Exceeded messages to build a list of routers that packets traverse, until the destination is reached and returns an ICMP Echo Reply message.

The timestamp values returned for each router along the path are the delay (latency) values, typically measured in milliseconds for each packet.

The sender expects a reply within a specified number of seconds. If a packet is not acknowledged within the expected interval, an asterisk is displayed. The Internet Protocol does not require packets to take the same route towards a particular destination, thus hosts listed might be hosts that other packets have traversed. If the host at hop #N does not reply, the hop is skipped in the output.

On Unix-line operating systems, traceroute employs User Datagram Protocol (UDP) datagrams by default, with destination port numbers ranging from 33434 to 33534. The traceroute utility usually has an option to instead use ICMP Echo Request (type 8) packets, like the Windows utility tracert does, or to use TCP SYN packets. If a network has a firewall and operates both Windows and Unix-like systems, more than one protocol must be enabled inbound through the firewall for traceroute to work and receive replies.

thumb_up 1 thumb_down 0 flag 0

Open Shortest Path First (OSPF) is a routing protocol for Internet Protocol (IP) networks. It uses a link state routing (LSR) algorithm and falls into the group of interior gateway protocols (IGPs), operating within a single autonomous system (AS). It is defined as OSPF Version 2 in RFC 2328 (1998) for IPv4).

thumb_up 0 thumb_down 0 flag 0

Enhanced Interior Gateway Routing Protocol (EIGRP) is an advanced distance-vector routing protocol that is used on a computer network for automating routing decisions and configuration. The protocol was designed by Cisco Systems as a proprietary protocol, available only on Cisco routers.

thumb_up 2 thumb_down 0 flag 0

DB2 has a mechanism called a cursor. Using a cursor is like keeping your finger on a particular line of text on a printed page.

In DB2, an application program uses a cursor to point to one or more rows in a set of rows that are retrieved from a table. You can also use a cursor to retrieve rows from a result set that is returned by a stored procedure. Your application program can use a cursor to retrieve rows from a table.

You can retrieve and process a set of rows that satisfy the search condition of an SQL statement. When you use a program to select the rows, the program processes one or more rows at a time.

The SELECT statement must be within a DECLARE CURSOR statement and cannot include an INTO clause. The DECLARE CURSOR statement defines and names the cursor, identifying the set of rows to retrieve with the SELECT statement of the cursor. This set of rows is referred to as the result table.

thumb_up 0 thumb_down 0 flag 1

There are changes, which could be performed across domain controllers in Active Directory, using the 'multi-master replication'. However, performing all changes this way may not be practical, and so it must be refined under one domain controller that maneuvers such change requests intelligently. And that domain controller is dubbed as Operations Master, sometimes known as Flexible Single Master Operations (FSMO).

Operations Master role is assigned to one (or more) domain controllers and they are classified as Forest-wide and Domain-wide based on the extent of the role. A minimum of five Operations master roles is assigned and they must appear atleast once in every forest and every domain in the forest for the 'Forest-wide' and 'Domain-wide' roles respectively.

thumb_up 5 thumb_down 0 flag 0

public int sumOfArray(int[] a, int n) { if (n == 0) return a[n]; else return a[n] + sumOfArray(a, n-1); } thumb_up 0 thumb_down 1 flag 0

The only difference is that with module_invoke_all(), for example, func_get_args() is invoked only once, while when using module_invoke() func_get_args() is called each time module_invoke() is called; that is a marginal difference, though.

thumb_up 0 thumb_down 0 flag 0

Name your module

The first step in creating a module is to choose a "short name" for it. This short name will be used in all file and function names in your module, so it must start with a letter, and it must contain only lower-case letters and underscores. For this example, we'll choose "current_posts" as the short name. Important note: Be sure you follow these guidelines and do not use upper case letters in your module's short name, since it is used for both the module's file name and as a function prefix. When you implement Drupal "hooks" (see later portions of tutorial), Drupal will only recognize your hook implementation functions if they have the same function name prefix as the name of the module file.

It's also important to make sure your module does not have the same short name as any theme you will be using on the site.

Create a folder and a module file

Given that our choice of short name is "current_posts" :

- Start the module by creating a folder in your Drupal installation at the path:

-

sites/all/modules/current_postsIn Drupal 6.x and 7.x,

sites/all/modules(orsites/all/modules/contribandsites/all/modules/custom)is the preferred place for non-core moduleswithsites/all/themes(orsites/all/themes/contribandsites/all/themes/custom) for non-core themes. By placing all site-specific files in thesitesdirectory, this allows you to more easily update the core files and modules without erasing your customizations. Alternatively, if you have a multi-site Drupal installation and this module is for only one specific site, you can put it insites/your-site-folder/modules.

-

- Create the info file for the module :

- Save it as

current_posts.infoin the directorysites/all/modules/current_posts - At a minimum, this file needs to contain the following...

name = Current Posts description = Description of what this module does core = 7.x

- Save it as

- Create the PHP file for the module :

- Save it as

current_posts.modulein the directorysites/all/modules/current_posts

- Save it as

- Add an opening PHP tag to the module :

-

<?php - Module files begin with the opening PHP tag. Do not place the CVS ID tag in your module. It is no longer needed with drupal.org's conversion to Git. If the coder module gives you error messages about it, then that module has not yet been updated to drupal.org's Git conventions.

-

thumb_up 5 thumb_down 0 flag 0

Java is a programming language and computing platform first released by Sun Microsystems in 1995. There are lots of applications and websites that will not work unless you have Java installed, and more are created every day. Java is fast, secure, and reliable. From laptops to datacenters, game consoles to scientific supercomputers, cell phones to the Internet.

thumb_up 0 thumb_down 0 flag 0

Simple Solution: The solution is to run two nested loops. Start traversing from left side. For every character, check if it repeats or not. If the character repeats, increment count of repeating characters. When the count becomes k, return the character. Time Complexity of this solution is O(n2)

We can Use Sorting to solve the problem in O(n Log n) time. Following are detailed steps.

1) Copy the given array to an auxiliary array temp[].

2) Sort the temp array using a O(nLogn) time sorting algorithm.

3) Scan the input array from left to right. For every element, count its

occurrences in temp[] using binary search. As soon as we find a characterthat occurs more than once, we return the character.

This step can be done in O(n Log n) time.

An efficient solution is to use Hashing to solve this in O(n) time on average.

- Create an empty hash.

- Scan each character of input string and insert values to each keys in the hash.

- When any character appears more than once, hash key value is increment by 1, and return the character.

See : http://www.geeksforgeeks.org/find-the-first-repeated-character-in-a-string/

thumb_up 1 thumb_down 0 flag 0

CREATE INDEX index_name

ON table_name (column1, column2, ...);

thumb_up 2 thumb_down 0 flag 0

No, Java is not pure object oriented.

Object oriented programming language should only have objects whereas java have int,char,float which are not objects.

As in C++ and some other object-oriented languages, variables of Java's primitive data tyoes are not objects. Values of primitive types are either stored directly in fields (for objects) or on the stacks (for methods) rather than on the heap, as is commonly true for objects. This was a conscious decision by Java's designers for performance reasons. Because of this, Java was not considered to be a pure object-oriented programming language.

thumb_up 0 thumb_down 0 flag 0

JVM is an interpreter which is installed in each client machine that is updated with latest security updates by internet . When this byte codes are executed , the JVM can take care of the security. So, java is said to be more secure than other programming languages.

thumb_up 2 thumb_down 0 flag 0

HTML

Step 1: Let's create our HTML structure.

First, we need a container div, which we'll call ".shopping-cart".

Inside the container, we will have a title and three items which will include:

- two buttons — delete button and favorite button

- product image

- product name and description

- buttons that will adjust quantity of products

- total price

<div class="shopping-cart"> <!-- Title --> <div class="title"> Shopping Bag </div> <!-- Product #1 --> <div class="item"> <div class="buttons"> <span class="delete-btn"></span> <span class="like-btn"></span> </div> <div class="image"> <img src="item-1.png" alt="" /> </div> <div class="description"> <span>Common Projects</span> <span>Bball High</span> <span>White</span> </div> <div class="quantity"> <button class="plus-btn" type="button" name="button"> <img src="plus.svg" alt="" /> </button> <input type="text" name="name" value="1"> <button class="minus-btn" type="button" name="button"> <img src="minus.svg" alt="" /> </button> </div> <div class="total-price">$549</div> </div> <!-- Product #2 --> <div class="item"> <div class="buttons"> <span class="delete-btn"></span> <span class="like-btn"></span> </div> <div class="image"> <img src="item-2.png" alt=""/> </div> <div class="description"> <span>Maison Margiela</span> <span>Future Sneakers</span> <span>White</span> </div> <div class="quantity"> <button class="plus-btn" type="button" name="button"> <img src="plus.svg" alt="" /> </button> <input type="text" name="name" value="1"> <button class="minus-btn" type="button" name="button"> <img src="minus.svg" alt="" /> </button> </div> <div class="total-price">$870</div> </div> <!-- Product #3 --> <div class="item"> <div class="buttons"> <span class="delete-btn"></span> <span class="like-btn"></span> </div> <div class="image"> <img src="item-3.png" alt="" /> </div> <div class="description"> <span>Our Legacy</span> <span>Brushed Scarf</span> <span>Brown</span> </div> <div class="quantity"> <button class="plus-btn" type="button" name="button"> <img src="plus.svg" alt="" /> </button> <input type="text" name="name" value="1"> <button class="minus-btn" type="button" name="button"> <img src="minus.svg" alt="" /> </button> </div> <div class="total-price">$349</div> </div> </div> css

* { box-sizing: border-box; } html, body { width: 100%; height: 100%; margin: 0; background-color: #7EC855; font-family: 'Roboto', sans-serif; } Next, let's style the first item, which is the title, by changing the height to 60px and giving it some basic styling, and the next three items which are the shopping cart products, will make them 120px height each and set them to display flex.

.title { height: 60px; border-bottom: 1px solid #E1E8EE; padding: 20px 30px; color: #5E6977; font-size: 18px; font-weight: 400; } .item { padding: 20px 30px; height: 120px; display: flex; } .item:nth-child(3) { border-top: 1px solid #E1E8EE; border-bottom: 1px solid #E1E8EE; } Now we've styled the basic structure of our shopping cart.

Let's style our products in order.

The first elements are the delete and favorite buttons.

I've always loved Twitter's heart button animation, I think it would look great on our Shopping Cart, let's implement it.

.shopping-cart { width: 750px; height: 423px; margin: 80px auto; background: #FFFFFF; box-shadow: 1px 2px 3px 0px rgba(0,0,0,0.10); border-radius: 6px; display: flex; flex-direction: column; } Next, let's style the first item, which is the title, by changing the height to 60px and giving it some basic styling, and the next three items which are the shopping cart products, will make them 120px height each and set them to display flex.

.title { height: 60px; border-bottom: 1px solid #E1E8EE; padding: 20px 30px; color: #5E6977; font-size: 18px; font-weight: 400; } .item { padding: 20px 30px; height: 120px; display: flex; } .item:nth-child(3) { border-top: 1px solid #E1E8EE; border-bottom: 1px solid #E1E8EE; } Now we've styled the basic structure of our shopping cart.

Let's style our products in order.

The first elements are the delete and favorite buttons.

I've always loved Twitter's heart button animation, I think it would look great on our Shopping Cart, let's implement it.

.buttons { position: relative; padding-top: 30px; margin-right: 60px; } .delete-btn, .like-btn { display: inline-block; Cursor: pointer; } .delete-btn { width: 18px; height: 17px; background: url("delete-icn.svg") no-repeat center; } .like-btn { position: absolute; top: 9px; left: 15px; background: url('twitter-heart.png'); width: 60px; height: 60px; background-size: 2900%; background-repeat: no-repeat; } We set class "is-active" for when we click the button to animate it using jQuery, but this is for the next section.

.is-active { animation-name: animate; animation-duration: .8s; animation-iteration-count: 1; animation-timing-function: steps(28); animation-fill-mode: forwards; } @keyframes animate { 0% { background-position: left; } 50% { background-position: right; } 100% { background-position: right; } } Next, is the product image which needs a 50px right margin.

.image { margin-right: 50px; } Let's add some basic style to product name and description. .description { padding-top: 10px; margin-right: 60px; width: 115px; } .description span { display: block; font-size: 14px; color: #43484D; font-weight: 400; } .description span:first-child { margin-bottom: 5px; } .description span:last-child { font-weight: 300; margin-top: 8px; color: #86939E; } Then we need to add a quantity element, where we have two buttons for adding or removing product quantity. First, make the CSS and then we'll make it work by adding some JavaScript.

.quantity { padding-top: 20px; margin-right: 60px; } .quantity input { -webkit-appearance: none; border: none; text-align: center; width: 32px; font-size: 16px; color: #43484D; font-weight: 300; } button[class*=btn] { width: 30px; height: 30px; background-color: #E1E8EE; border-radius: 6px; border: none; cursor: pointer; } .minus-btn img { margin-bottom: 3px; } .plus-btn img { margin-top: 2px; } button:focus, input:focus { outline:0; } And last, which is the total price of the product.

.total-price { width: 83px; padding-top: 27px; text-align: center; font-size: 16px; color: #43484D; font-weight: 300; } Let's also make it responsive by adding the following lines of code:

@media (max-width: 800px) { .shopping-cart { width: 100%; height: auto; overflow: hidden; } .item { height: auto; flex-wrap: wrap; justify-content: center; } .image img { width: 50%; } .image, .quantity, .description { width: 100%; text-align: center; margin: 6px 0; } .buttons { margin-right: 20px; } } That's it for the CSS.

Java Script

Let's make the heart animate when we click on it by adding the following code:

$('.like-btn').on('click', function() { $(this).toggleClass('is-active'); }); Cool! Now let's make the quantity buttons work.

$('.minus-btn').on('click', function(e) { e.preventDefault(); var $this = $(this); var $input = $this.closest('div').find('input'); var value = parseInt($input.val()); if (value > 1) { value = value - 1; } else { value = 0; } $input.val(value); }); $('.plus-btn').on('click', function(e) { e.preventDefault(); var $this = $(this); var $input = $this.closest('div').find('input'); var value = parseInt($input.val()); if (value < 100) { value = value + 1; } else { value =100; } $input.val(value); And this is our final version.

thumb_up 0 thumb_down 0 flag 0

Android service is a component that isused to perform operations on the background such as playing music, handle network transactions, interacting content providers etc. It doesn't has any UI (user interface).

The service runs in the background indefinitely even if application is destroyed.

Moreover, service can be bounded by a component to perform interactivity and inter process communication (IPC).

The android.app.Service is subclass of ContextWrapper class.

thumb_up 0 thumb_down 0 flag 0

Abroadcast receiver (receiver) is an Android component which allows you to register for system or application events. All registered receivers for an event are notified by the Android runtime once this event happens.

For example, applications can register for theACTION_BOOT_COMPLETED system event which is fired once the Android system has completed the boot process.

Implementation

A receiver can be registered via theAndroidManifest.xml file.

Alternatively to this static registration, you can also register a receiver dynamically via theContext.registerReceiver() method.

The implementing class for a receiver extends theBroadcastReceiver class.

If the event for which the broadcast receiver has registered happens, theonReceive()method of the receiver is called by the Android system.

thumb_up 7 thumb_down 0 flag 0

Algorithm: areRotations(str1, str2)

1. Create a temp string and store concatenation of str1 to str1 in temp. temp = str1.str1 2. If str2 is a substring of temp then str1 and str2 are rotations of each other. Example: str1 = "ABACD" str2 = "CDABA" temp = str1.str1 = "ABACDABACD" Since str2 is a substring of temp, str1 and str2 are rotations of each other. See: http://www.geeksforgeeks.org/a-program-to-check-if-strings-are-rotations-of-each-other/

thumb_up 0 thumb_down 0 flag 0

We can solve this problem by observing some cases, As N needs to be LCM of all numbers, all of them will be divisors of N but because a number can be taken only once in sum, all taken numbers should be distinct. The idea is totake every divisor of N once in sum to maximize the result.

How can we say that the sum we got is maximal sum? The reason is, we have taken all the divisors of N into our sum, now if we take one more number into sum which is not divisor of N, then sum will increase but LCM property will not be hold by all those integers. So it is not possible to add even one more number into our sum, except all divisor of N so our problem boils down to this, given N find sum of all divisors, which can be solved in O(sqrt(N)) time.

So total time complexity of solution will O(sqrt(N)) with O(1) extra space.

// C/C++ program to get maximum sum of Numbers // with condition that their LCM should be N #include <bits/stdc++.h> using namespace std; // Method returns maximum sum f distinct // number whose LCM is N int getMaximumSumWithLCMN(int N) { int sum = 0; int LIM = sqrt(N); // find all divisors which divides 'N' for (int i = 1; i <= LIM; i++) { // if 'i' is divisor of 'N' if (N % i == 0) { // if both divisors are same then add // it only once else add both if (i == (N / i)) sum += i; else sum += (i + N / i); } } return sum; } // Driver code to test above methods int main() { int N = 12; cout << getMaximumSumWithLCMN(N) << endl; return 0; } Output : 28 See : http://www.geeksforgeeks.org/maximum-sum-distinct-number-lcm-n/

thumb_up 1 thumb_down 0 flag 0

thumb_up 0 thumb_down 0 flag 0

thumb_up 18 thumb_down 0 flag 0

He plucked 7 flowers from the garden and 8 he kept in every temple. LOGIC: let the no. of flowers he plucked be 'x' he washed x no. of flowers in the pond =2x let the no. of flowers he kept in each temple be 'y' and that equals=2x-y then he washed 2x-y=2(2x-y)=4x-2y Then again he kept y no. of flowers in the 2nd temple =4x-2y-y He again doubled the flowers by washing them in the magical pond=2(4x-2y-y)=8x-4y-2y and then he again kept y no.of flowers in the 3rd temple So,8x-4y-2y-y=0 as he is left with nothing. so that will be 8x-(4y-2y-y)=0=8x-7y=0 =8x+0=7y As 0 has no value it will be cut So, 8x=7y as 7 and 8 are co-prime,they will be multiplied by each other. =8x=56=7y so x becomes 7 and y becomes 8 =7=x=no. of flowers plucked =8=y=no. of flowers kept in each temple

thumb_up 0 thumb_down 0 flag 0

If it is convex, a trivial way to check it is that the point is laying on the same side of all the segments (if traversed in the same order).

You can check that easily with the cross product (as it is proportional to the cosine of the angle formed between the segment and the point, those with positive sign would lay on the right side and those with negative sign on the left side).

thumb_up 1 thumb_down 0 flag 0

Method 1 (Use Sorting)

1) Sort both strings

2) Compare the sorted strings

Method 2 (Count characters)

This method assumes that the set of possible characters in both strings is small. In the following implementation, it is assumed that the characters are stored using 8 bit and there can be 256 possible characters.

1) Create count arrays of size 256 for both strings. Initialize all values in count arrays as 0.

2) Iterate through every character of both strings and increment the count of character in the corresponding count arrays.

3) Compare count arrays. If both count arrays are same, then return true.

thumb_up 0 thumb_down 0 flag 0

A type expresses that a value has certain properties. A class or interface groups those properties so you can handle them in an organized, named way. You relate a type you're defining to a class using inheritance, but this is not always (and in fact usually is not) how you want to relate two types. Your type may implement any number of interfaces without implying a parent/child relationship with them.

An interface therefore provides a way other than subtyping to relate types. It lets you add an orthogonal concern to the type you're defining, such as Comparable or Closeable. These would be almost unusable if they were classes (one reason is because Java would only allow you to extend that class; and subtyping forces hierarchy, access to unrelated members, and other likely unwanted things).

Historically an interface is somewhat less useful than a trait (e.g. in Scala) because you cannot add any implementation.

thumb_up 4 thumb_down 0 flag 0

HTML and CSS are complimentary to the creation and design of a web document.

HTML (Hyper Text Markup Language)

HTML creates the bones of the document.

HTML can exist without CSS.

CSS (Cascading Style Sheets)

CSS changes the way HTML looks.

CSS can be placed separately or directly within HTML.

Example of HTML:

<p>The p tag defines a paragraph.</p> <p>With a few exceptions, all HTML tags require an opening and closing tag.</p> Example of CSS:

CSS used separately from HTML:

At the top of the document, the CSS can be contained within its own special section. In this example, we are choosing to make all HTML paragraph tags appear bolded:

<style> p { font-style: bold; } </style>

CSS used directly within HTML:

The CSS rules can be placed directly within HTML in the document:

<p style="font-style: bold;">The p tag defines a paragraph.</p> <p style="font-style: bold;">The style attribute contained within the tag defines CSS rules chosen from a pre-defined list of commands (such as 'font-style').</p> thumb_up 3 thumb_down 0 flag 0

#include<stdio.h> is a statement which tells the compiler to insert the contents of stdio at that particular place. In C/C++ we use some functions like printf(), scanf(), cout,cin. These functions are not defined by the programmer. These functions are already defined inside the language library.

thumb_up 6 thumb_down 0 flag 0

stdio stands for "standard input output".

Now say printf is a function. When you use it, its code must be written somewhere so that you directly use it. "stdio.h" is a header file, where this and other similar functions are defined. stdio.h stands for standard input output header C standard library header files.

thumb_up 2 thumb_down 2 flag 0

Number has 51 digits, so the last digit's got to be 1.

625 x 16 = 10000. so any multiple of 10000 is divisible by 625. last four digits are 4141.

(2500 + 1250) = 3750 divisible by 625, leaving a remainder of 391.

thumb_up 0 thumb_down 0 flag 0

Flowchart is the diagrammatic representation of an algorithm with the help of symbols carrying certain meaning. Using flowchart, we can easily understand a program. Flowchart is not language specific. We can use the same flowchart to code a program using different programming languages. Though designing a flowchart helps the coding easier, the designing of flowchart is not a simple task and is time consuming.

thumb_up 0 thumb_down 0 flag 0

Algorithm is a tool a software developer uses when creating new programs. An algorithm is a step-by-step recipe for processing data; it could be a process an online store uses to calculate discounts, for example.

thumb_up 0 thumb_down 3 flag 0

Debugging run-time errors can be done by using 2 general techniques:

Technique 1:

Narrow down where in the program the error occurs.

Technique 2:

Get more information about what is happening in the program.

One way to implement both of these techniques is to print the provided information while the program is running.

thumb_up 2 thumb_down 0 flag 0

A compiler is a computer program (or a set of programs) that transforms source code written in a programming language (the source language) into another computer language (the target language), with the latter often having a binary form known as object code.

thumb_up 0 thumb_down 0 flag 0

It is a mechanism allowing you to ensure the authenticity of an assembly. It allows you to ensure that an assembly hasn't been tampered. It is also necessary if you want to put them into the GAC.

thumb_up 0 thumb_down 1 flag 0

Private Assembly:

- Private assembly can be used by only one application.

- Private assembly will be stored in the specific application's directory or sub-directory.

- There is no other name for private assembly.

- Strong name is not required for private assembly.

- Private assembly doesn't have any version constraint.

- Public Assembly:

- Public assembly can be used by multiple applications.

- Public assembly is stored in GAC (Global Assembly Cache).

- Public assembly is also termed as shared assembly.

- Strong name has to be created for public assembly.

- Public assembly should strictly enforce version constraint.

thumb_up 0 thumb_down 0 flag 0

To put some form of hierarchical efficiency into IP addressing.

That said, the details don't matter because the lack of IPv4 address space required the abandonment of classes to better utilize the limited address space.

Before 1992, it was easy to determine the subnet size simply by looking at the first few bits of an IP address which identified it's class.

thumb_up 0 thumb_down 0 flag 0

Object-Orientation is all about objects that collaborate by sending messages.

Java has the following features which make it object oriented:

- Objects sending message to other objects.

- Everything is an object.

- Subtype polymorphism

- Encapsulation

- Data hiding

- Inheritance

- Abstraction

thumb_up 0 thumb_down 4 flag 0

A surrogate key is any column or set of columns that can be declared as the primary key instead of a "real" or natural key. Sometimes there can be several natural keys that could be declared as the primary key, and these are all called candidate keys. So a surrogate is a candidate key.

thumb_up 0 thumb_down 1 flag 0

SELECT emp_id, emp_name FROM Employee WHERE group=A;

thumb_up 0 thumb_down 0 flag 0

Take two arrays and separate alphabets and numeric values and sort both the arrays.

thumb_up 0 thumb_down 0 flag 0

thumb_up 0 thumb_down 0 flag 0

class GfG{ public static void main(String[] args) { int c=0,a,temp; int n=153;//It is the number to check armstrong temp=n; while(n>0) { a=n%10; n=n/10; c=c+(a*a*a); } if(temp==c) System.out.println("armstrong number"); else System.out.println("Not armstrong number"); } } thumb_up 0 thumb_down 1 flag 0

public : it is a access specifier that means it will be accessed by publicly. static : it is access modifier that means when the java program is load then it will create the space in memory automatically. void : it is a return type i.e it does not return any value. main() : it is a method or a function name

thumb_up 0 thumb_down 0 flag 0

In cryptography, encryption is the process of encoding a message or information in such a way that only authorized parties can access it. Encryption does not of itself prevent interference, but denies the intelligible content to a would-be interceptor. In an encryption scheme, the intended information or message, referred to a plaintext, is encrypted using an encryption algorithm, generating ciphertext that can only be read if decrypted. For technical reasons, an encryption scheme usually uses a pseudo-random encryption key generated by an algorithm. It is in principle possible to decrypt the message without possessing the key, but, for a well-designed encryption scheme, considerable computational resources and skills are required. An authorized recipient can easily decrypt the message with the key provided by the originator to recipients but not to unauthorized users.

Some of the encryption algorithms are:

- AES: Advance Encryption Standards

- RSA

- Blowfish

thumb_up 2 thumb_down 0 flag 0

Selenium is a portable software-testing framework for web application. Selenium provides a record/playback tool for authoring tests without the need to learn a test scripting language(Selenium IDE). It also provides a test domain specific language (Selenese) to write tests in a number of popular programming languages, including C#, Java, Ruby, Php and Scala/ The tests can then run against most modern web browsers. Selenium deploys on Windows, Linux and OS X platforms. It is open source software, released under the Apache 2.0 license web developers can download and use it without charge.

thumb_up 0 thumb_down 1 flag 0

The Digital Signature Algorithm (DSA) is a Federal Information Processing Standard for digital signatures. In August 1991 the National Institute of Standards and Technology (NIST) proposed DSA for use in their Digital Signature Standard (DSS) and adopted it as FIPS 186 in 1993.

thumb_up 0 thumb_down 0 flag 0

cmd -> ipconfig

thumb_up 0 thumb_down 0 flag 0

You could create db.js:

var mysql = require('mysql'); var connection = mysql.createConnection({ host : '127.0.0.1', user : 'root', password : '', database : 'chat' }); connection.connect(function(err) { if (err) throw err; }); module.exports = connection; Then in your app.js, you would simply require it.

var express = require('express'); var app = express(); var db = require('./db'); app.get('/save',function(req,res){ var post = {from:'me', to:'you', msg:'hi'}; db.query('INSERT INTO messages SET ?', post, function(err, result) { if (err) throw err; }); }); server.listen(3000); This approach allows you to abstract any connection details, wrap anything else you want to expose and require db throughout your application while maintaining one connection to your db thanks to how node require works :)

thumb_up 0 thumb_down 0 flag 0

A directive is essentially a function† that executes when the Angular compiler finds it in the DOM. The function(s) can do almost anything, which is why I think it is rather difficult to define what a directive is. Each directive has a name (like ng-repeat, tabs, make-up-your-own) and each directive determines where it can be used: element, attribute, class, in a comment.

A directive normally only has a (post)link function. A complicated directive could have a compile function, a pre-link function, and a post-link function.

thumb_up 1 thumb_down 0 flag 0

The difference between factory and service is just like the difference between a function and an object

Factory and Service is a just wrapper of a provider.

Factory

Factory can return anything which can be a class(constructor function), instance of class, string, number or boolean. If you return a constructor function, you can instantiate in your controller.

myApp.factory('myFactory', function () { // any logic here.. // Return any thing. Here it is object return { name: 'Joe' } } Service

Service does not need to return anything. But you have to assign everything in this variable. Because service will create instance by default and use that as a base object.

myApp.service('myService', function () { // any logic here.. this.name = 'Joe'; } When should we use them?

Factory is mostly preferable in all cases. It can be used when you have constructor function which needs to be instantiated in different controllers.

Service is a kind of Singleton Object. The Object return from Service will be same for all controller. It can be used when you want to have single object for entire application. Eg: Authenticated user details.

thumb_up 0 thumb_down 0 flag 0

$routeProvider is the key service which set the configuration of urls, map them with the corresponding html page or ng-template, and attach a controller with the same.

thumb_up 1 thumb_down 0 flag 0

-

Data-binding − It is the automatic synchronization of data between model and view components.

-

Scope − These are objects that refer to the model. They act as a glue between controller and view.

-

Controller − These are JavaScript functions that are bound to a particular scope.

-

Services − AngularJS come with several built-in services for example $https: to make a XMLHttpRequests. These are singleton objects which are instantiated only once in app.

-

Filters − These select a subset of items from an array and returns a new array.

-

Directives − Directives are markers on DOM elements (such as elements, attributes, css, and more). These can be used to create custom HTML tags that serve as new, custom widgets. AngularJS has built-in directives (ngBind, ngModel...)

-

Templates − These are the rendered view with information from the controller and model. These can be a single file (like index.html) or multiple views in one page using "partials".

-

Routing − It is concept of switching views.

-

Model View Whatever − MVC is a design pattern for dividing an application into different parts (called Model, View and Controller), each with distinct responsibilities. AngularJS does not implement MVC in the traditional sense, but rather something closer to MVVM (Model-View-ViewModel). The Angular JS team refers it humorously as Model View Whatever.

-

Deep Linking − Deep linking allows you to encode the state of application in the URL so that it can be bookmarked. The application can then be restored from the URL to the same state.

-

Dependency Injection − AngularJS has a built-in dependency injection subsystem that helps the developer by making the application easier to develop, understand, and test.

thumb_up 1 thumb_down 0 flag 0

- Interfaces are more flexible, because a class can implement multiple interfaces. Since Java does not have multiple inheritance, using abstract classes prevents your users from using any other class hierarchy. In general, prefer interfaces when there are no default implementations or state. Java collections offer good examples of this (Map, Set, etc.).

- Abstract classes have the advantage of allowing better forward compatibility. Once clients use an interface, you cannot change it; if they use an abstract class, you can still add behavior without breaking existing code. If compatibility is a concern, consider using abstract classes.

- Even if you do have default implementations or internal state, consider offering an interface and an abstract implementation of it. This will assist clients, but still allow them greater freedom if desired.

thumb_up 1 thumb_down 0 flag 0

The Open Group Architecture Framework (TOGAF) is a framework for enterprise architecture that provides an approach for designing, planning, implementing, and governing an enterprise information technology architecture.

thumb_up 1 thumb_down 0 flag 0

One way is to use a port number other than default port 1433 or a port determined at system startup for named instances. You can use SQL Server Configuration Manager to set the port for all IP addresses listed in the TCP/IP Properties dialog box. Be sure to delete any value for the TCP Dynamic Ports property for each IP address. You might want to disable the SQL Server Browser service as well or at least hide the SQL Server instance so that the Browser service doesn't reveal it to any applications that inquire which ports the server is listening to. (One reason to not disable it would be if you have multiple instances of SQL Server on the host because it "maps" connections to instances.) You can hide an instance in the properties page for the instance's protocol, although this just means that SQL Server won't respond when queried by client applications looking for a list of SQL Server machines. Making these kinds of changes is security by obscurity, which is arguably not very secure and shouldn't be your only security measure. But they do place speed bumps in the path of attackers trying to find an instance of SQL Server to attack.

thumb_up 0 thumb_down 0 flag 0

192.168.0.0/16, 127.0.0.0/8 and 10.0.0.0/8

10.0.0.0 through 10.255.255.255 169.254.0.0 through 169.254.255.255 (APIPA only) 172.16.0.0 through 172.31.255.255 192.168.0.0 through 192.168.255.255

Above are the private IP address ranges.

thumb_up 0 thumb_down 0 flag 0

Windows 2000 and Windows 98 provide Automatic Private IP Addressing (APIPA), a service for assigning unique IP addresses on small office/home office (SOHO) networks without deploying the DHCP service. Intended for use with small networks with fewer than 25 clients, APIPA enables Plug and Play networking by assigning unique IP addresses to computers on private local area networks.

APIPA uses a reserved range of IP addresses (169.254.x .x ) and an algorithm to guarantee that each address used is unique to a single computer on the private network.

APIPA works seamlessly with the DHCP service. APIPA yields to the DHCP service when DHCP is deployed on a network. A DHCP server can be added to the network without requiring any APIPA-based configuration. APIPA regularly checks for the presence of a DHCP server, and upon detecting one replaces the private networking addresses with the IP addresses dynamically assigned by the DHCP server.

thumb_up 0 thumb_down 0 flag 0

Arecurrent neural network (RNN) is a class of artificial neural network where connections between units form a directed cycle. This creates an internal state of the network which allows it to exhibit dynamic temporal behavior. Unlike feedforward neural network, RNNs can use their internal memory to process arbitrary sequences of inputs. This makes them applicable to tasks such as unsegmented connected handwriting recognition or speech recognition.

thumb_up 0 thumb_down 0 flag 0

Alternating Current (AC)

- Alternating current describes the flow of charge that changes direction periodically. As a result, the voltage level also reverses along with the current. AC is used to deliver power to houses, office buildings, etc.

Generating AC

- AC can be produced using a device called an alternator. This device is a special type of

- A loop of wire is spun inside of a magnetic field, which induces a current

- The rotation of the wire can come from any number of means: a wind turbine, a steam turbine, flowing water, and so on.

- Because the wire spins and enters a different magnetic polarity periodically, the voltage and current alternates on the wire.

- Generating AC can be compared to our previous water analogy:

- To generate AC in a set of water pipes, we connect a mechanical crank to a piston that moves water in the pipes back and forth (our "alternating" current).

- Notice that the pinched section of pipe still provides resistance to the flow of water regardless of the direction of flow.

Direct Current (DC)

Direct current is a bit easier to understand than alternating current. Rather than oscillating back and forth, DC provides a constant voltage or current.

Generating DC

DC can be generated in a number of ways:

- An AC generator equipped with a device called a "commutator" can produce direct current

- Use of a device called a "rectifier" that converts AC to DC

- Batteries provide DC, which is generated from a chemical reaction inside of the battery

Using our water analogy again, DC is similar to a tank of water with a hose at the end.

- The tank can only push water one way: out the hose. Similar to our DC-producing battery, once the tank is empty, water no longer flows through the pipes.

thumb_up 0 thumb_down 0 flag 0

In multitasking computer operating systems, adaemon is a computer program hat runs as a background process, rather than being under the direct control of an interactive user. Traditionally, the process names of a daemon end with the letterd, for clarification that the process is, in fact, a daemon, and for differentiation between a daemon and a normal computer program. For example, syslogd is the daemon that implements the system logging facility, and sshd is a daemon that serves incoming SSH connections.

In a Unix environment, the parent process of a daemon is often, but not always, the init process. A daemon is usually either created by a process forking a child process and then immediately exiting, thus causing init to adopt the child process, or by the init process directly launching the daemon. In addition, a daemon launched by forking and exiting typically must perform other operations, such as dissociating the process from any controlling terminal (tty). Such procedures are often implemented in various convenience routines such asdaemon(3) in Unix.

Systems often start daemons at boot time which will respond to network requests, hardware activity, or other programs by performing some task. Daemons such as cron may also perform defined tasks at scheduled times.

thumb_up 0 thumb_down 0 flag 0

Network File System (NFS) is a distributed file system protocol originally developed by Sun Microsystem in 1984, allowing a user on a client computer o access files over a computer network much like local storage is accessed. NFS, like many other protocols, builds on the Open Network Computing Remote Procedure Call(ONC RPC) system. The NFS is an open standard defined in Request For Comments (RFC), allowing anyone to implement the protocol.

thumb_up 0 thumb_down 0 flag 0

using the following command in linux:

ethtool eth0

thumb_up 2 thumb_down 0 flag 0

Determine if the problem is hardware or software.

Is it your installation of the operating system that is causing the lags and lockups, or is it a hardware issue like bad Ram or a blown cap… or bad sectors on a hard drive… or a wonky power supply?

One way to do this, is to boot your computer to a diagnostic OS… The Ultimate Boot CD, for example. Run some tests.

Another way is to remove your hard drive, install a blank one (you are only going to use it for this), and install Windows on it. If everything works great… you've just reduced your possibilities to a bad hard drive, or a bad install of Windows. A few HDD tests, read some S.M.A.R.T.. data, and you'd know whether it was the issue or not.

You could open the case, make sure that all the caps are good, all the connections are tight, that Ram and cards are all seated properly… that the heatsink is on the processor properly, drive data cables are secure and in good order.

If it is a laptop…that doesn't change opening it up and checking it out… it just puts that extra effort at the end of the session. Last resort kind of thing. But I've got prior experience with a bent heat pipe causing a heatsink to hit a case at an angle that caused the whole assembly to lift off a processor just enough when everything was screwed down tight that the unit would lag, hang, and overheat. Took it apart… and it ran fine. That was fun.. simulating reassembly to observe what the cooling rig did under pressure.

Start simple. For me when it is on the bench, I boot to Parted Magic. I read the SMART data, and backup important information. If the drive is to blame, the sooner I can get the important information off the drive, the better. Plus, I don't have to worry about passworded accounts this way. Once I tentatively eliminate the drive as a possibility, I'll boot to UBCD and start down the list. That, or I grab the PC-Doctor flash drive, since the corporate entity I work for purchased them. Our shop has a Toolkit, and it has a PC-Doctor suite running out of a customized WinPE… but I prefer the "official" one. It lights an amber LED when there is an issue, and that makes it easy to spot from across the bench.

Assuming the hardware all checks, I move on to the OS. This is where diagnostics start to lose ground to time and objective. More often than not, when repairing a computer, "fix" means "make it work the way it is supposed to". I know that this seems logical… but sometimes what would fix the problem would take far longer to uncover and implement than just nuking the system with a factory recovery.

Case in point, an older woman with an older HP that had been upgraded to Win10 came in tonight, with the system behaving unpredictably. The hardware checked out, and a scan of the system found some infected files and uncommon alterations. Her lags and locks were, at least potentially, being caused by a dirty system. I could have cleaned it. She only used it for surfing the web. She had nothing she wanted to keep (I asked), and she was OK with my performing a factory recovery. I didn't actually "fix" the issue. I obliterated it, and rebuilt Windows on top of the smoking remains.

Now, there is a diagnostic reason for this as well. A clean install of Windows can fix a great many issues, large and small. In fact, it is probably the most common piece of advice (Reinstall Windows) behind, "Check your drivers" and "Reboot the computer." A clean install of Windows can also help reveal issues, without OTHER issues getting in the way… which is why I opened this with the Hard Drive Swap Gambit… putting another hard drive in the machine and installing Windows, and seeing how the system operates. You get to see if that would fix the issue, without touching the issue. Then, you can work backwards.

There have been times that a customer has come in, with a computer that has been in their shop for a decade or two… it would have been upgraded, and upgraded, and their inventory control software can't be reinstalled anymore, because the company that made it doesn't exist… or something similar. So Windows can't be reinstalled. However… the average user? Nope. Back up the data, and nuke it.

Many times it happens due to server heavy load, deadlocks or some process may hanged. I will first monitor the database threads if anything sleeping long time or taking too much of time - I will kill the process from database server.

Secondly, will check the page by applying start time and end time between methods to analyse which part is taking too long time to responds back to client request - I will find out and optimize this.

Look up maximum threads for server both web server and data base server - I will try to increase the threads connections

thumb_up 2 thumb_down 0 flag 0

Web Sockets is a next-generation bidirectional communication technology for web applications which operates over a single socket and is exposed via a JavaScript interface in HTML 5 compliant browsers.

Once you get a Web Socket connection with the web server, you can send data from browser to server by calling a send() method, and receive data from server to browser by an onmessage event handler.

Following is the API which creates a new WebSocket object.

var Socket = new WebSocket(url, [protocal] );

Here first argument, url, specifies the URL to which to connect. The second attribute, protocol is optional, and if present, specifies a sub-protocol that the server must support for the connection to be successful.

thumb_up 3 thumb_down 0 flag 0

thumb_up 0 thumb_down 0 flag 0

Dynamic Host Configuration Protocol (DHCP)

DHCP is a network protocol for assigning IP address to computer's.

Suppose you are in college or employee of any company, then the IP address which show is of internet connection of that company or school router. But if there 100 PC's will be connected from that one connection then you have to assign sub Ip to all those PC's it can be done (automatically) by DHCP protocol.

As an example if you and your family member are using the wifi of house you all will be having different IP's such as

125.80.0.1 125.80.0.5

these are different IP's although you are using the same wifi, this distribution of IP's is done by DHCP automatically.

Advantages

- DHCP also keeps track of computers connected to the network and prevents more than one computer from having the same IP address.

- Automatic allocation of IP's in a range in a network automatically.

Disadvantage

- Security Issues :- If a rogue DHCP server is introduced to the network. A rough offer IP addresses to user connecting to the network, hence information sent over the network can be intercepted, violating privacy and network security.

thumb_up 0 thumb_down 0 flag 0

(x>1) -> right shift will multiply the number by 2.

thumb_up 2 thumb_down 0 flag 0

Latches are level trigerred.

i.e output <-- input when clk = 1

Flip flops are edge triggered. i.e output <-- input when clk transitions from 0 to 1

Flip-flops can be implementedas two back-to-back latches driven by complementary clock phases. The first latch is driven by positive phase while the second latch is driven by negative phase

thumb_up 0 thumb_down 0 flag 0

Yes, definitly we can declare a class inside an interface.

example:-

Interface I

{

class GfG

{

void m1(){

System.out.println("inside m1");

}

void m2(){

System.out.println("inside m2");

}

}

}

Class Test{

public static void main(String args[])

I.GfG ia = new I.GfG();

ia.m1();

ia.m2();

}

In this case, the inner class is becoming as static inner class inside interface where we can access the members of inner class like we do in case of static inner class.

thumb_up 1 thumb_down 0 flag 0

What is Good about Linux:

- Really fast (modest laptop - 4GB RAM)

- Easy to update

- Fast browsing (SO MUCH BETTER THAN WINDOWS 10)

- No need for much security

- Advanced desktop (I use MATE/Compiz - love it)

- Transparent System

Bad about Linux:

- No MS Office {Huge problem)

- Other non-existent software (Photoshop, Dreamweaver)

- Fragmented user design - this is a good thing but it makes every distribution a learning curve

- Developers's GUI vision and kernel vision are not integrated (as I see it)

- Gaming is Bad (I hear)

Good about Windows:

- Decent performance

- MS Office (this is the main reason I need Windows)

- Gaming (I personally don't)

Bad about Windows

- Cost

- Performance (terrible - browser [Chrome] performance on Windows 10 is a huge problem)

- Regressive behavior of the user interface with no real way to change it

- Opaque System

thumb_up 0 thumb_down 1 flag 0

- Distance Vector routing protocols are based on Bellma and Ford algorithms.

- Distance Vector routing protocols are less scalable such as RIP supports 16 hops and IGRP has a maximum of 100 hops.

- Distance Vector are classful routing protocols which means that there is no support of Variable Length Subnet Mask (VLSM) and Classless Inter Domain Routing (CIDR).

- Distance Vector routing protocols uses hop count and composite metric.

- Distance Vector routing protocols support dis-contiguous subnets.

- Common distance vector routing protocols include: Appletalk RTMP, IPX RIP, IP RIP, IGRP.

thumb_up 0 thumb_down 0 flag 0

- Distance Vector routing protocols are based on Bellma and Ford algorithms.

- Distance Vector routing protocols are less scalable such as RIP supports 16 hops and IGRP has a maximum of 100 hops.

- Distance Vector are classful routing protocols which means that there is no support of Variable Length Subnet Mask (VLSM) and Classless Inter Domain Routing (CIDR).

- Distance Vector routing protocols uses hop count and composite metric.

- Distance Vector routing protocols support dis-contiguous subnets.

- Common distance vector routing protocols include: Appletalk RTMP, IPX RIP, IP RIP, IGRP

Common distance vector routing protocols include:

- Appletalk RTMP

- IPX RIP

- IP RIP

- IGRP

thumb_up 0 thumb_down 0 flag 0

WiMAX (Worldwide Interoperability for Microwave Access) is a wireless industry coalition dedicated to the advancement of IEEE 802.16 standards for broadband wireless access (BWA) networks.

WiMAX supports mobile, nomadic and fixed wireless applications. A mobile user, in this context, is someone in transit, such as a commuter on a train. A nomadic user is one that connects on a portable device but does so only while stationary -- for example, connecting to an office network from a hotel room and then again from a coffee shop.

thumb_up 0 thumb_down 0 flag 0

While C# has a set of capabilities similar to Java, it has added several new and interesting features. Delegation is the ability to treat a method as a first-class object. A C# delegate is used where Java developers would use an interface with a single method.

Delegates, represent methods that are callable without knowledge of the target object.

thumb_up 0 thumb_down 0 flag 0

thumb_up 0 thumb_down 0 flag 0

We can do this using external sorting. What we have to do for this is, sort small chunks of data first, write it back to the disk and then iterate over those to sort all.

thumb_up 0 thumb_down 1 flag 0

Priority Queue is similar to queue where we insert an element from the back and remove an element from front, but with a one difference that the logical order of elements in the priority queue depends on the priority of the elements. The element with highest priority will be moved to the front of the queue and one with lowest priority will move to the back of the queue. Thus it is possible that when you enqueue an element at the back in the queue, it can move to front because of its highest priority.

Example:

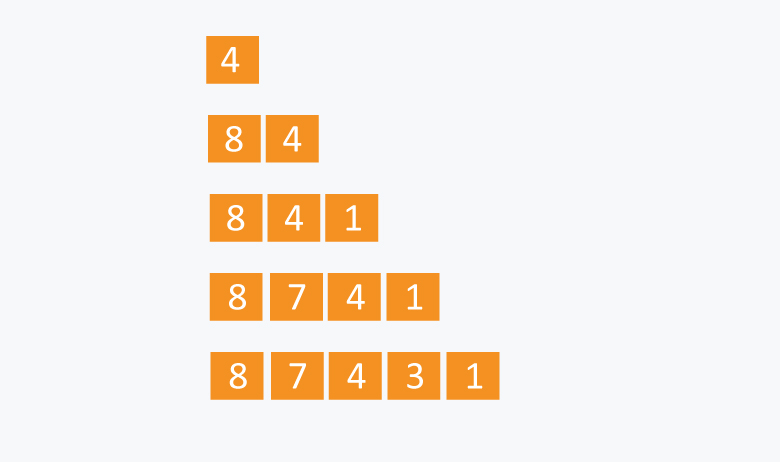

Let's say we have an array of 5 elements : {4, 8, 1, 7, 3} and we have to insert all the elements in the max-priority queue.

First as the priority queue is empty, so 4 will be inserted initially.

Now when 8 will be inserted it will moved to front as 8 is greater than 4.

While inserting 1, as it is the current minimum element in the priority queue, it will remain in the back of priority queue.

Now 7 will be inserted between 8 and 4 as 7 is smaller than 8.

Now 3 will be inserted before 1 as it is the 2nd minimum element in the priority queue. All the steps are represented in the diagram below:

We can think of many ways to implement the priority queue.

Naive Approach:

Suppose we have N elements and we have to insert these elements in the priority queue. We can use list and can insert elements in O(N) time and can sort them to maintain a priority queue in O(N logN ) time.

Efficient Approach:

We can use heaps to implement the priority queue. It will take O(log N) time to insert and delete each element in the priority queue.

Based on heap structure, priority queue also has two types max- priority queue and min - priority queue.

Let's focus on Max Priority Queue.

Max Priority Queue is based on the structure of max heap and can perform following operations:

maximum(Arr) : It returns maximum element from the Arr.

extract_maximum (Arr) - It removes and return the maximum element from the Arr.

increase_val (Arr, i , val) - It increases the key of element stored at index i in Arr to new value val.

insert_val (Arr, val ) - It inserts the element with value val in Arr.

Implementation:

length = number of elements in Arr.

Maximum :

int maximum(int Arr[ ]) { return Arr[ 1 ]; //as the maximum element is the root element in the max heap. } Complexity: O(1)

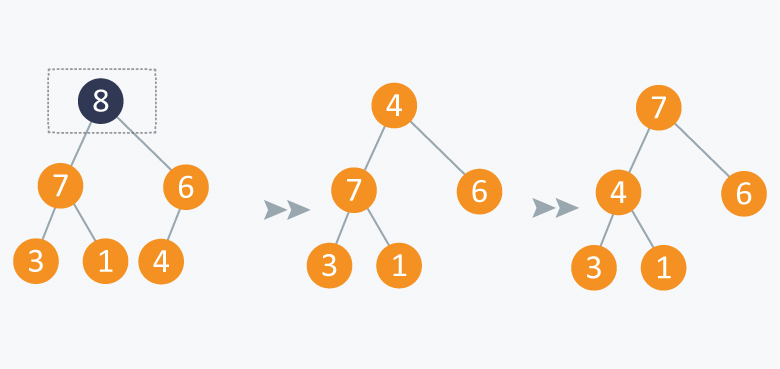

Extract Maximum: In this operation, the maximum element will be returned and the last element of heap will be placed at index 1 and max_heapify will be performed on node 1 as placing last element on index 1 will violate the property of max-heap.

int extract_maximum (int Arr[ ]) { if(length == 0) { cout<< "Can't remove element as queue is empty"; return -1; } int max = Arr[1]; Arr[1] = Arr[length]; length = length -1; max_heapify(Arr, 1); return max; } Complexity: O(logN).

Increase Value: In case increasing value of any node, may violate the property of max-heap, so we will swap the parent's value with the node's value until we get a larger value on parent node.

void increase_value (int Arr[ ], int i, int val) { if(val < Arr[ i ]) { cout<<"New value is less than current value, can't be inserted" <<endl; return; } Arr[ i ] = val; while( i > 1 and Arr[ i/2 ] < Arr[ i ]) { swap|(Arr[ i/2 ], Arr[ i ]); i = i/2; } } Complexity : O(log N).

Insert Value :

void insert_value (int Arr[ ], int val) { length = length + 1; Arr[ length ] = -1; //assuming all the numbers greater than 0 are to be inserted in queue. increase_val (Arr, length, val); } Complexity: O(log N).

Example:

Initially there are 5 elements in priority queue.

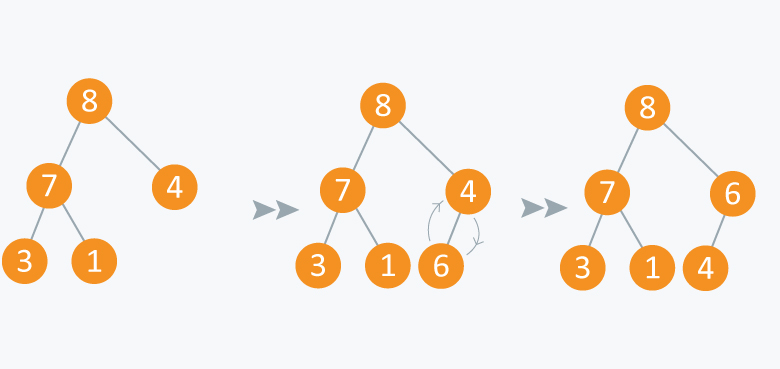

Operation: Insert Value(Arr, 6)

In the diagram below, inserting another element having value 6 is violating the property of max-priority queue, so it is swapped with its parent having value 4, thus maintaining the max priority queue.

Operation: Extract Maximum:

In the diagram below, after removing 8 and placing 4 at node 1, violates the property of max-priority queue. So max_heapify(Arr, 1) will be performed which will maintain the property of max - priority queue.

As discussed above, like heaps we can use priority queues in scheduling of jobs. When there are N jobs in queue, each having its own priority. If the job with maximum priority will be completed first and will be removed from the queue, we can use priority queue's operation extract_maximum here. If at every instant we have to add a new job in the queue, we can use insert_value operation as it will insert the element in O(log N) and will also maintain the property of max heap.

thumb_up 0 thumb_down 0 flag 0

Testing Big Data application is more a verification of its data processing rather than testing the individual features of the software product. When it comes to Big data testing, performance and functional testing are the key.

In Big data testing QA engineers verify the successful processing of terabytes of data using commodity cluster and other supportive components. It demands a high level of testing skills as the processing is very fast. Processing may be of three types

- Batch

- Real Time

- Interactive